Today we're launching our new series of Relace models, which are state-of-the-art for code retrieval.

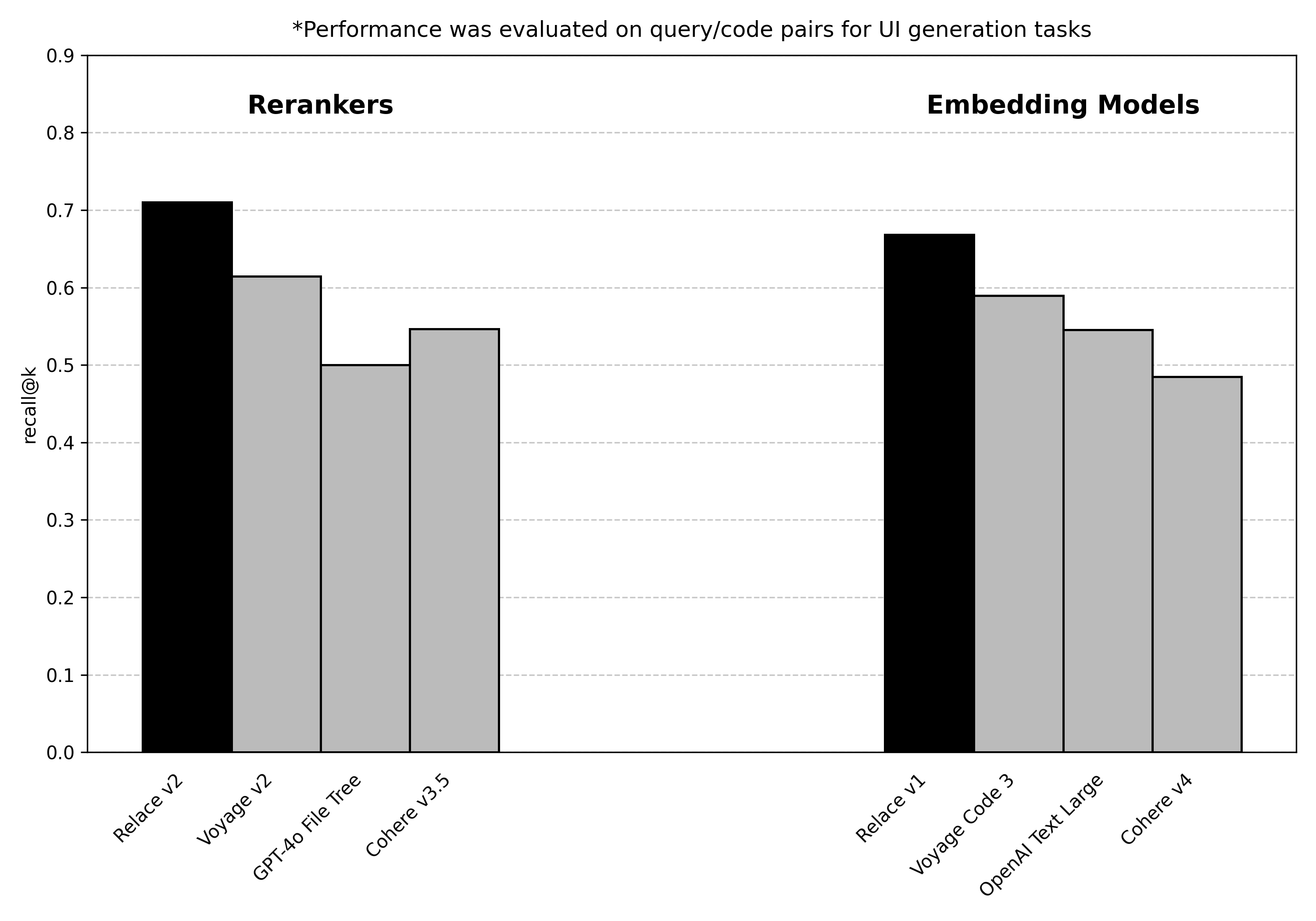

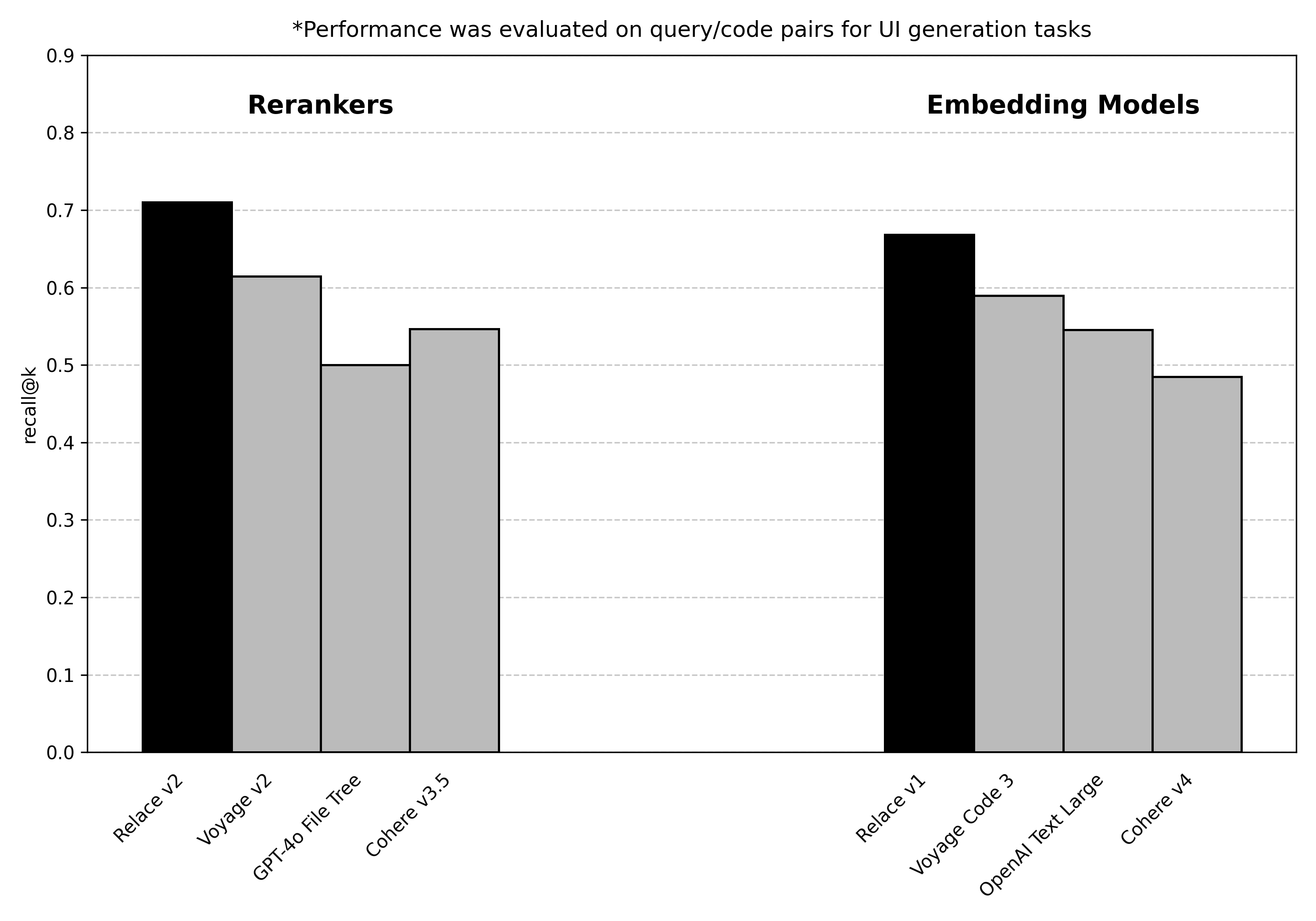

- Recall@k of 0.71 on UI generation tasks (next best is Voyage AI with 0.61)

- Reduces input token usage by 47%

Relace models are designed to slot naturally into most AI codegen products, making them faster, cheaper, and more reliable.

Instant Apply was about optimizing output tokens. When you're editing an existing codebase, much of the code stays the same. Prompting frontier models to generate only code snippets, then merging them into the file with Relace at >2500 tok/s, lets you separate concerns: the frontier model generates only the difficult new sections of code, and a faster, more economical model fills in the surrounding context.

Our new code embedding and reranker models are about optimizing input tokens. Given a user request to change a codebase, you want to retrieve only the files relevant to implementing that request. This matters for two reasons:

- Frontier model performance degrades steadily after ~50k tokens. Polluting the context window with irrelevant files makes the generated code worse.

- Input tokens are expensive. The fewer files you can get away with passing in, the more money you save.

Many existing retrieval models on the market are optimized for generic RAG systems and don't perform as well on code. We trained our models on hundreds of thousands of pairs matching a user query to a git commit and its code, to make them best in class for AI codegen applications.

What defines a good code retrieval system?

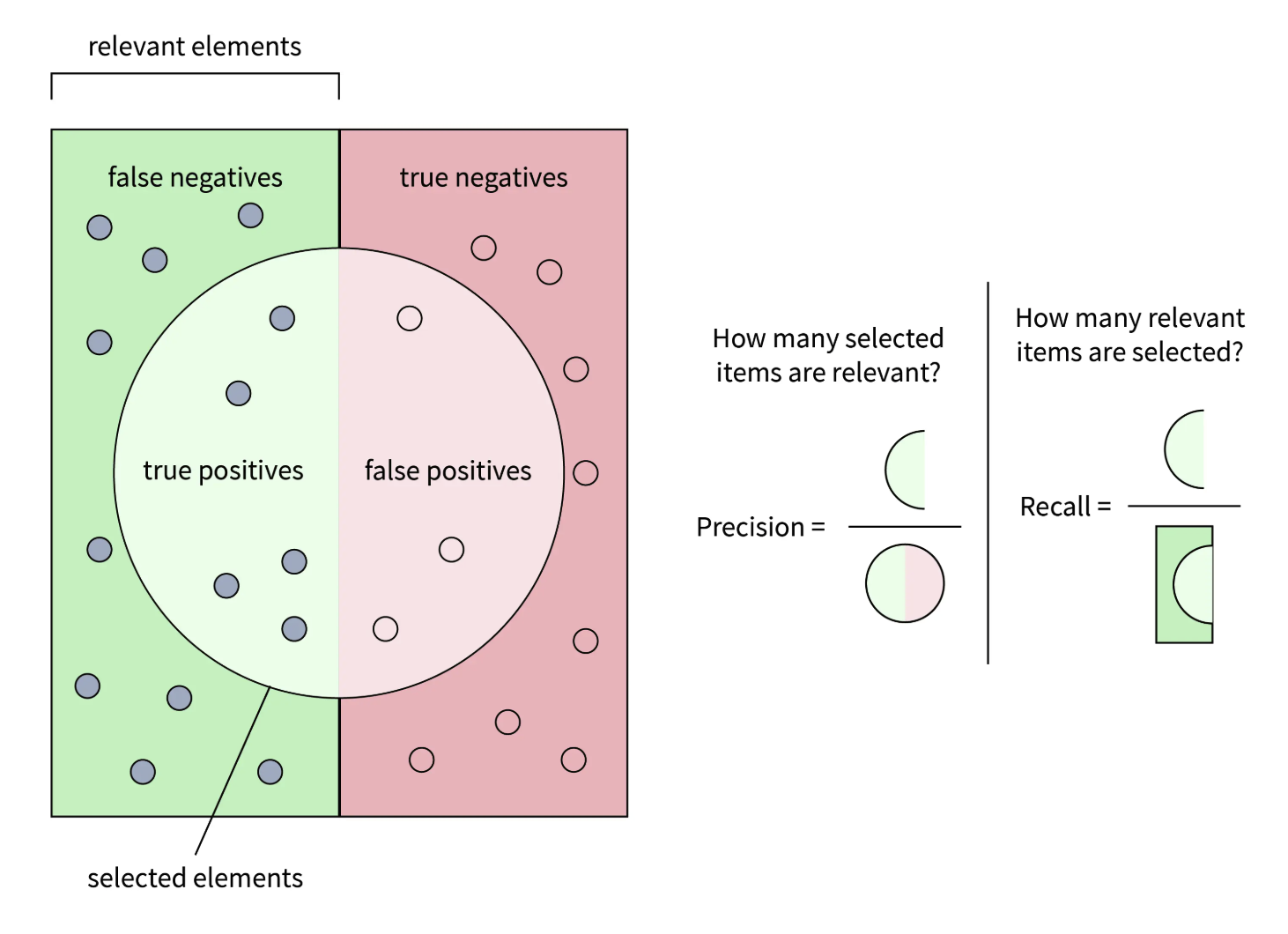

At its core, code retrieval is a binary classification problem: a file is either "relevant" or "irrelevant" to a user request.

This means there are two kinds of errors:

- False positives (file is irrelevant and marked as relevant)

- False negatives (file is relevant and marked as irrelevant)

We judge the quality of a retrieval system by measuring how often these errors occur in aggregate on a benchmark dataset, using two metrics: precision and recall. Precision is the proportion of retrieved files that are actually relevant, and recall is the proportion of relevant files that are actually retrieved.
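To make the two definitions concrete, here is a minimal sketch for a single query, assuming you have the set of files a system retrieved and a labeled set of truly relevant files (the function names and file paths are illustrative). It also includes recall@k, computed here as the fraction of relevant files that land in the top k of a ranked list; the post does not spell out its exact definition or the value of k, so treat this as one common convention.

```python
def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Precision and recall for a single query."""
    true_positives = len(retrieved & relevant)
    # Precision: share of retrieved files that are actually relevant.
    precision = true_positives / len(retrieved) if retrieved else 0.0
    # Recall: share of relevant files that were actually retrieved.
    recall = true_positives / len(relevant) if relevant else 1.0
    return precision, recall


def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Recall when only the top-k files of a ranked list are passed into context."""
    if not relevant:
        return 1.0
    return len(set(ranked[:k]) & relevant) / len(relevant)


retrieved = {"src/App.tsx", "src/Navbar.tsx", "src/utils.ts"}
relevant = {"src/App.tsx", "src/Navbar.tsx", "src/theme.ts"}
precision, recall = precision_recall(retrieved, relevant)  # both are 2/3
```

Here `src/utils.ts` is a false positive (it costs only tokens), while the missing `src/theme.ts` is a false negative (the model never sees a file it needs).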

For code retrieval, recall is the critical metric. It's much worse for a relevant file to be left out of context entirely than for an extra irrelevant file to be included. After all, in the absence of a retrieval system we would already be passing every file (relevant or irrelevant) into context.

Thus, the best code retrieval system is one that minimizes irrelevant code tokens passed into context while maintaining near perfect recall.
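One way to put this objective into practice, sketched under the assumption that you have a labeled benchmark where every candidate file carries a model score, a token count, and a ground-truth relevance label: sweep a score cutoff from strictest to loosest and keep the first one whose mean recall clears a target. The `Candidate` type, the 0.95 target, and the threshold sweep itself are illustrative choices, not Relace's evaluation procedure.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    path: str
    score: float    # relevance score from the retrieval model (0 to 1)
    tokens: int     # size of the file in tokens
    relevant: bool  # ground-truth label from the benchmark


def pick_threshold(queries: list[list[Candidate]], target_recall: float = 0.95):
    """Return (threshold, mean_recall, total_tokens) for the strictest score cutoff
    whose mean recall across all queries still meets target_recall."""
    thresholds = sorted({c.score for q in queries for c in q}, reverse=True)
    for t in thresholds:  # strictest first, so the first hit uses the fewest tokens
        recalls, tokens = [], 0
        for q in queries:
            kept = [c for c in q if c.score >= t]
            n_relevant = sum(c.relevant for c in q)
            n_hit = sum(c.relevant for c in kept)
            recalls.append(n_hit / n_relevant if n_relevant else 1.0)
            tokens += sum(c.tokens for c in kept)
        mean_recall = sum(recalls) / len(recalls)
        if mean_recall >= target_recall:
            return t, mean_recall, tokens
    # Only reached when there are no candidates at all.
    return 0.0, 1.0, 0


benchmark = [
    [Candidate("a.ts", 0.9, 1000, True), Candidate("b.ts", 0.4, 5000, False),
     Candidate("c.ts", 0.3, 800, True)],
    [Candidate("d.ts", 0.8, 1200, True), Candidate("e.ts", 0.2, 3000, False)],
]
print(pick_threshold(benchmark, target_recall=0.95))  # → (0.3, 1.0, 8000)
```

In this toy benchmark, reaching full recall forces the cutoff down to 0.3, which drags in the irrelevant 5,000-token `b.ts`: 8,000 of the 11,000 total tokens are sent. A retriever that scored `c.ts` above `b.ts` would hit the same recall with far fewer tokens, which is exactly what "minimizing irrelevant tokens at near-perfect recall" rewards.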

What's the difference between embeddings and rerankers?

Embedding models and rerankers are both transformer-based large language models used to compare two inputs (in our case a query and a candidate code snippet). The difference is when the comparison happens.

The reranker takes the two inputs into its context window and directly outputs a similarity score between 0 and 1. The embedding model separately outputs a k-dimensional vector for each of the two inputs, and you get a score by computing the cosine similarity between the vectors.
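The embedding-side comparison is plain vector arithmetic once both vectors exist. A minimal sketch of cosine similarity in pure Python follows; the two short vectors are made-up placeholders for real k-dimensional embeddings, and the commented signatures only contrast the two model interfaces and are hypothetical.

```python
import math

# Hypothetical interfaces, for contrast only:
#   reranker(query: str, code: str) -> float   # one forward pass per (query, file) pair
#   embedder(text: str) -> list[float]         # one forward pass per text, reusable


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension k")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


query_vec = [0.2, 0.8, 0.1]  # placeholder query embedding
code_vec = [0.25, 0.7, 0.0]  # placeholder code embedding
score = cosine_similarity(query_vec, code_vec)
```

Unlike the reranker's score, cosine similarity ranges from -1 to 1, although many embedding models produce mostly positive similarities in practice.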

Embeddings have the advantage that they can be precomputed for your codebase. When a user makes a request, you only need to calculate one new embedding on-the-fly (for the query), and you can compare it to all your code embeddings with an efficient vector similarity algorithm.
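The precompute-once, query-cheaply workflow can be sketched in plain Python. `toy_embed` is a hypothetical stand-in (a hashed bag of words) for a real embedding model, and the linear scan with `heapq.nlargest` stands in for a vector database's nearest-neighbor search; neither reflects Relace's API.

```python
import hashlib
import heapq
import math
import re

DIM = 256  # embedding dimension k (toy value)


def toy_embed(text: str) -> list[float]:
    """Stand-in for a real embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z_]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class EmbeddingIndex:
    """Embeds every file once up front; each query then costs one new embedding."""

    def __init__(self, files: dict[str, str]):
        self.vectors = {path: toy_embed(src) for path, src in files.items()}

    def update(self, path: str, src: str) -> None:
        # Only files that changed need to be re-embedded.
        self.vectors[path] = toy_embed(src)

    def search(self, query: str, k: int = 5) -> list[tuple[float, str]]:
        q = toy_embed(query)  # the only embedding computed at request time
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = ((sum(a * b for a, b in zip(q, v)), path)
                  for path, v in self.vectors.items())
        return heapq.nlargest(k, scored)


files = {
    "src/Navbar.tsx": "export function Navbar() { return <nav className='navbar'>links</nav> }",
    "src/api/users.ts": "export async function fetchUsers() { return fetch('/api/users') }",
}
index = EmbeddingIndex(files)
results = index.search("make the navbar sticky", k=1)
```

The `update` method is where the cost model shows up: every edited file must be re-embedded before the index is accurate again.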

Rerankers require you to pass your entire codebase through the model in parallel for every user request, but they are also more accurate.
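By contrast, a reranker must score every (query, file) pair on every request. A minimal sketch, assuming the reranker is called over the network so a thread pool can issue the calls concurrently; `score_pair` is a hypothetical stand-in (simple word overlap) for a real reranker call, which would read the query and the file together.

```python
import re
from concurrent.futures import ThreadPoolExecutor


def score_pair(query: str, code: str) -> float:
    """Hypothetical stand-in for one reranker call returning a score in [0, 1].
    Here: fraction of query words that also appear in the file."""
    q = set(re.findall(r"[a-z_]+", query.lower()))
    c = set(re.findall(r"[a-z_]+", code.lower()))
    return len(q & c) / len(q) if q else 0.0


def rerank(query: str, files: dict[str, str], max_workers: int = 16) -> list[tuple[str, float]]:
    """Score every file against the query concurrently, then sort best-first."""
    paths = list(files)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # One scoring call per file, for every single user request.
        scores = list(pool.map(lambda p: score_pair(query, files[p]), paths))
    return sorted(zip(paths, scores), key=lambda item: item[1], reverse=True)


ranking = rerank("make the navbar sticky", {
    "src/Navbar.tsx": "navbar sticky header",
    "src/api/users.ts": "fetch users api",
})
```

Nothing here can be cached across requests, because each score depends on the query; that per-request cost is the price of the reranker's higher accuracy.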

The optimal code retrieval system depends primarily on the size of the codebase your AI agent is working over.

For vibe-coding applications where the end user is non-technical, you can usually get away with using the reranker alone. These codebases tend to be small and change frequently, so an embedding approach would require you to recompute embeddings for a relatively large proportion of the codebase after each change, and thus doesn't save much compute.

Agents working over large codebases change a much smaller proportion of the codebase with each request, so precomputed embeddings stay mostly valid, while sending the entire codebase to be ranked for every user query becomes impractical.

In these cases, it's better to do a two-stage retrieval. The trick is to use a fast form of retrieval (e.g., regex, ripgrep, or precomputed embeddings stored in a vector database) over the initial corpus of 1000+ documents, then pass the top 100 to a more accurate reranker.
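A minimal sketch of the two-stage pattern, using the corpus size and top-100 cutoff from the text. The keyword-count prefilter is a simple stand-in for ripgrep, regex, or an embedding lookup, and `toy_reranker` (Jaccard overlap) is a hypothetical placeholder for an accurate reranker model; all function names are illustrative.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z_]+", text.lower()))


def keyword_prefilter(query: str, files: dict[str, str], top_n: int = 100) -> list[str]:
    """Stage 1: cheap lexical filter (stand-in for ripgrep, regex, or a vector DB)."""
    q = tokenize(query)
    hits = {path: len(q & tokenize(src)) for path, src in files.items()}
    matched = [path for path, n in hits.items() if n > 0]
    matched.sort(key=lambda path: hits[path], reverse=True)
    return matched[:top_n]


def toy_reranker(query: str, code: str) -> float:
    """Stage 2 stand-in: a real reranker would read the query and file together."""
    q, c = tokenize(query), tokenize(code)
    union = q | c
    return len(q & c) / len(union) if union else 0.0


def two_stage_retrieve(query: str, files: dict[str, str],
                       top_n: int = 100, top_k: int = 10) -> list[str]:
    candidates = keyword_prefilter(query, files, top_n)  # 1000+ files -> at most top_n
    # Only the shortlisted candidates are sent to the expensive reranker.
    candidates.sort(key=lambda path: toy_reranker(query, files[path]), reverse=True)
    return candidates[:top_k]


files = {f"src/file{i}.ts": "export const x = 1" for i in range(1000)}
files["src/Navbar.tsx"] = "export function Navbar sticky header nav"
files["src/Footer.tsx"] = "export function Footer nav links"
top_files = two_stage_retrieve("make the navbar sticky", files)
```

Because the reranker only ever sees what stage 1 lets through, the first stage should be generous: a file it drops is a false negative no reranker can recover, which is why a wide shortlist like the top 100 is a reasonable default.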

Why use Relace?

We specifically trained our reranker and embeddings model to retrieve code files relevant to user queries.

Our reranker's recall@k is SOTA on a variety of benchmarks. For dynamic UI codebases, the Relace reranker would cut your input token bill in half compared to passing everything into context.

We've also optimized our deployment to achieve massive throughput on our models and rank entire codebases in ~1-2s. This is critical for realtime AI codegen applications, where high latency leads to significant user frustration.

You can access our new retrieval models, along with Instant Apply, at app.relace.ai.

We're already working with amazing companies like Lovable, MagicPatterns, Codebuff, Create, and Tempo Labs to make their workflows cheaper, faster, and more reliable.

Don't hesitate to reach out if you have any questions or need help with integration!